TL;DR: I think a specific kind of "backward" reasoning, common in conservative religious traditions in the US, is one of the things driving the current crisis.

Long version:.......

First, my experience is with the LDS church, which has spent a few decades trying to be Evangelical enough to be buddies with the actual Evangelicals, and my experiences so far suggest that LDS church members have a lot in common with Evangelical Christians at this point in time, with regard to the issues in this long-ass post.

I was #LDS (i.e., #Mormon) for the first mumblenumbermumble decades of my life. I was taught--explicitly, not by the also-ubiquitous methods of "read-between-the-lines", "pay attention to consequences instead of words," etc.--that the right and proper way to #reason about all things religious was thus:

- Find out what is true

- Use all resources after that to support, justify, explain, and believe that truth

The first point is a problem, of course, because it comes before any external evidence. There is #evidence of a kind, but it is 100% subjective: the results of your #spiritual promptings or feelings or inspiration. You get these by praying really hard, thinking the right thoughts, etc. Thinking "negative" thoughts (often any kind of skepticism or doubt is included in this category) will drive the Holy Spirit away and he won't be able to tell you how true all the stuff is.

There are many people--and I truly believe they are almost all sincere and well-meaning--to help you navigate this difficult process. This means to help you come to the right conclusions (i.e., that Jesus is God and died for our sins, that Joseph Smith was His prophet, that the LDS church is the only true #church etc.). Coming to the "wrong" conclusions means you aren't doing it right, hard enough, humbly enough, etc. so you will keep at it, encouraged by family, friends, and leaders, until you get the "right" answer.

See, you make up your mind about the truth of things before acquiring any outside #evidence. I was a full-time missionary in Mexico for two years; I am aware that of course evidence does get used, but not the way a scientist or other evidence-informed person would use it. We used scriptures, #logic, personal stories, empirical data, etc. as merely one of many possible tools to bring another soul to Christ. LDS doctrine is clear on this (where it's notably unclear on a huge range of other things): belief/#faith/testimony does not come from empirical evidence. It comes from the Holy Spirit, and only if you ask just right.

Empirical evidence, clear reasoning, etc. are nice but they're just a garnish; they're only condiments. The main meal is promptings (i.e., feelings) from the Holy Spirit. That is where true knowledge comes from. All other #knowledge is inferior and subordinate. All of it. If the Holy Spirit tells you the moon is cheese, then by golly you now have a cheesemoon. More disturbingly, if the Holy Ghost tells you to kill your neighbors, you should presumably do that. This kind of "prepare for the worst" thinking is a lot more common in conservative Christian groups than I think some people realize.

Anyway, you get these promptings. They're probably not because you're a sleep-deprived, angsty, sincere teenager who has been bathed and baked in this culture your entire life and has no concept of any outcome other than this. You get the promptings. Now you know. You know that Jesus is your Savior, that Joseph Smith was his prophet, that the LDS Church is the only true and living church on the face of the etc. etc.

You don't believe; you know.

So the next step is... nothing specific, really. You're done learning. That step is over. As we were reminded repeatedly as young missionaries: your job is to teach others, not to be taught by them. You go through the rest of your life with this knowledge, and you share it whenever you can. Of course, some events and facts and speech might make you doubt your hard-won knowledge. What to do?

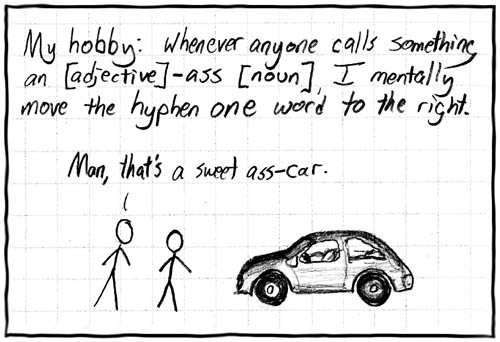

You put the knowledge first and make the #facts fit it. You arrange the facts you see or read or whatever so that they fit this knowledge you acquired on your knees late at night with tears in your eyes, or in Sacrament Meeting the morning after a drama-filled youth conference. If you can't make the facts fit your knowledge, you reject the facts.

You seriously reject facts, and pretty casually. You might decide they aren't facts, or you might get really interested in the origin of an idea so you can discredit it, etc. Some people reject the theory of evolution. Others reject a history in which many of the founding fathers of the USA were #atheist, #agnostic, or Not Very Good People. You can reject anything, really. You can reject the evidence of your eyes and ears, as the Party demands. It's kind of easy, in fact.

Millions of people think like this: they explicitly reject information that does not fit the narrative they have acquired through a process that depended 100% on subjective experiences (and, afterward, is heavily dominated by "authority figures" and trusted friends who tell you what to believe this week).

As a psychologist, even though #DecisionScience is not my area of research, I can tell you various ways in which one's #subjective experience can be manipulated, especially with the support of a life-saturating religious worldview and community. Relegating facts to a supporting role (at best) means giving all kinds of biases free rein in influencing your views. Facts were one of the things that might have minimized that process. In fact, I think using facts as correctives for human biases was a main motivation underlying the development of the #ScientificMethod.

This becomes how you live your life: find out what's true, then rearrange your worldview, your attitudes, your specific beliefs, your behavior, and potentially even how you evaluate evidence to fit that knowledge. You aren't faking it, you aren't pretending; you simply believe something different. You see the world differently. I'm guessing you'd pass a lie detector test.

Note that nowhere in this process is there ever what a #philosopher, a #scientist, or a mathematician would call an "honest, open inquiry." That would imply uncertainty about the outcome of the inquiry. It would imply a willingness to accept unexpected answers if the evidence or reasoning led there. That's not possible because there can be only one answer: what you already know. Evidence cannot be allowed to threaten knowledge.

Coincidentally, now you're a perfect member of the Trump/Musk/whoever personality cult. All you need are some trusted sources (e.g. friends, neighbors, celebrities, local church leaders) to tell you that #Trump is a Good #Christian, that #AOC is secretly a communist, that #Obama was born in Africa, that Killary is literally eating babies, that a pizza parlor has a torture basement, that Zelensky is a villain and Putin a hero, etc. Literally anything. You haven't just learned how to do this; it is how your brain works, now. This is how "reasoning" happens. This is how belief and worldview and personal commitment are formed and shifted.

Now you casually accept new concepts like "crisis actors", "alternative facts", the "deep state", and "feelings-based reality." You have no problem doing this. Conspiracy theories are a cakewalk; you could fully believe six impenetrable QAnon ravings before breakfast.

I've seen progressives casually assume that Evangelical-type Christians are hypocrites, or lying, or "virtue signaling" as they state their support for whatever value-violating thing Trump or Musk or any national GOP figure has said or done (e.g., "Hey, I now believe that god doesn't love disabled people, after all!"). I've accused conservatives of those things myself, though I don't actually believe that's what is happening. What we're seeing is not just hypocrisy or dishonesty. What's happening, at least with many religious people, is that a trusted leader has told them they should believe a different thing, so now they do. It's that simple. Many might even die for their new belief in the right circumstances (certain Christians are a little bit obsessed with the possibility of dying for their faith, so this isn't as high a bar as you might think).

Sure, some people who flipflop overnight probably are lying or putting on an act even they don't truly believe. However, many more are simply being who they are, or who they've become by existing in this ideological/cultural system for years.

Obviously, I believe this kind of reasoning is not good and makes the world a worse place. I would like to reduce it or even eliminate it. It is embedded, though, with other dynamics: ingroup/outgroup tribalism, authoritarianism (boy howdy do conservative churches train you to be an authoritarian), prejudices of various kinds, and basic cognitive biases (which run rampant in such environments).

It's also bound up with religious #AntiIntellectualism. In the LDS church, for instance, there's a scripture that gets tossed around at election time saying that being educated is good, but only up to a point (any education that leads a person to question God's words, etc. is by definition "too much" learning). As a person with a graduate degree, my last decade or so in the LDS church was marked by a more or less constant social tension from the possibility that I might "know too much".

Education reliably reduces this problematic kind of thinking/believing system in many people. Specifically, "liberal arts" education (which isn't about liberalism or necessarily arts) is the special sauce; the classes many students will be forced to take for "general education" at most US universities are pretty good at teaching students different ways of thinking and helping them try on alternative worldviews. Many of the people learning multiple worldviews and getting some tools for reasoning and evidence, etc. tend to use them for the rest of their lives. Even truly exploring one or two wrong alternative worldviews or thinking patterns tends to yield big rewards over time. Notably, the GOP's attacks on higher ed have become much worse, recently.

Anyway, this is (IMO) what progressives are up against in the USA. It is not just that some people believe different things; it's that many of those people have entirely different cognitive/emotional/social structures and processes for how belief happens and what it means.

Undoing this will take generations. In the meantime, I encourage pushing back on conservative flip-flops. No matter what, not even Evangelical congressmen want to look inconsistent. Even the evangelicalest of Christians will sometimes engage with facts and reasoning to some degree, and pressure simply works, sometimes. Keep your expectations for personal change low, however.