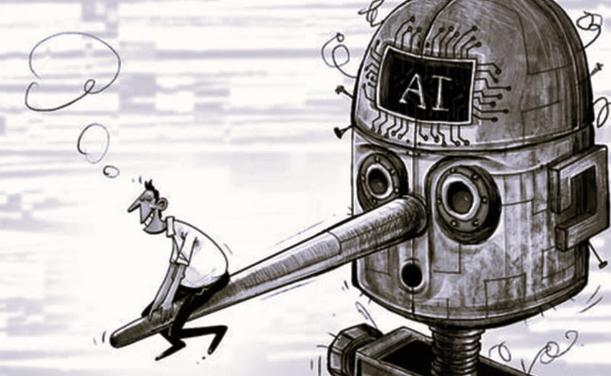

ChatGPT accidentally exposed OpenAI's deceptive business model: "GPT-5 is often just routing prompts between GPT-4o and o3."

Corporate AI marketing manufactures technological breakthroughs to charge premium prices for commoditized infrastructure.

Classic Silicon Valley grift: rebrand existing products, multiply prices, and rely on information asymmetry to exploit customers.